Breaking Down the Submissions Given the well documented irregularities throughout the comment submission process, it was clear from the start that the data was going to be duplicative and messy. If I wanted to do the analysis without having to set up the tools and infrastructure typically used for “big data,” I needed to break down the 22M+ comments and 60GB+ worth of text data and metadata into smaller pieces.⁴ Thus, I tallied up the many duplicate comments⁵ and arrived at 2,955,182 unique comments and their respective duplicate counts. I then mapped each comment into semantic space vectors⁶ and ran some clustering algorithms on the meaning of the comments.⁷ This method identified nearly 150 clusters of comment submission texts of various sizes.⁸ After clustering comment categories and removing duplicates, I found that less than 800,000 of the 22M+ comments submitted to the FCC (3-4%) could be considered truly unique. Here are the top 20 comment ‘campaigns’, accounting for a whopping 17M+ of the 22M+ submissions:

The vast majority of FCC comments were submitted as exact duplicates or as part of letter-writing/spam campaigns.

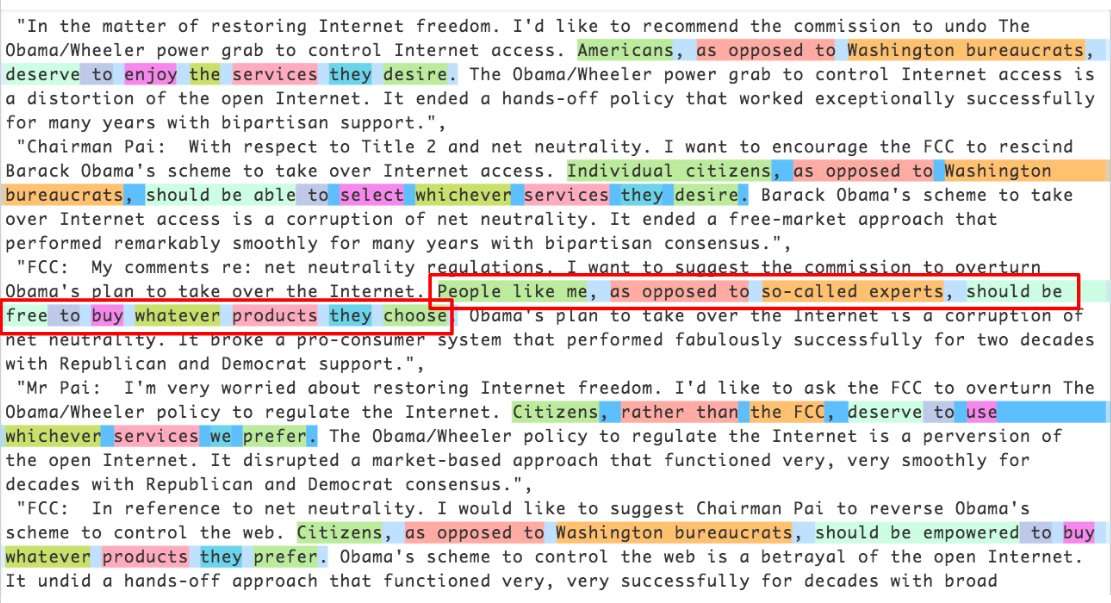

So how do we know which of these are legitimate public mailing campaigns, and which of of these were bots? Identifying 1.3 Million Mail-Merged Spam Comments The first and largest cluster of pro-repeal documents was especially notable. Unlike the other clusters I found (which contained a lot of repetitive language) each of the comments here was unique; however, the tone, language, and meaning across each comment was largely uniform. The language was also a bit stilted. Curious to dig deeper, I used regular expressions⁹ to match up the words in the clustered comments:

I found the term “People like me” particularly ironic.

It turns out that there are 1.3 million of these. Each sentence in the faked comments looks like it was generated by a computer program. A mail merge swapped in a synonym for each term to generate unique-sounding comments.¹⁰ It was like mad-libs, except for astroturf. When laying just five of these side-by-side with highlighting, as above, it’s clear that there’s something fishy going on. But when the comments are scattered among 22+ million, often with vastly different wordings between comment pairs, I can see how it’s hard to catch. Semantic clustering techniques, and not typical string-matching techniques, did a great job at nabbing these. Finally, it was particularly chilling to see these spam comments all in one place, as they are exactly the type of policy arguments and language you expect to see in industry comments on the proposed repeal¹¹, or, these days, in the FCC Commissioner’s own statements lauding the repeal.¹² Pro-Repeal Comments were more Duplicative and in Much Larger Blocks But just because the largest block of pro-repeal submissions turned out to be a premediated and orchestrated spam campaign¹³, it doesn’t necessarily follow that there are many more pro-repeal spambots to be verified, right? As it turns out, the next two highest comments on the list (“In 2015, Chairman Tom Wheeler’s …” and “The unprecedented regulatory power the Obama Administration imposed …”) have already been picked out from previous reporting as possible astroturf as well. Going down the list, each comment cluster/duplicate would need its own investigation, which is beyond the scope of this post. We can, however, still gain an understanding of the distribution of comments by taking a broader view. Reprising the bar chart above breaking down the top FCC comments, let’s look at the top 300 comment campaigns that comprise an astonishing 21M+ of the 22M+ submissions¹⁴:

Keep-Net Neutrality comments were much more likely to deviate from the form letter, and dominated in the long tail.

cheese_is_available on November 24th, 2017 at 09:06 UTC »

Regarding the confidence interval that is over 100% : for such a low incidence of anti-net neutrality comment you should use the wilson score that is used in epidemiology for close to 0 probabilities. It gives from 99,12% to 99,90% pro net neutrality comment with 95% confidence (98,82 to 99,92 with 99% confidence).

import math def wilson_score(pos, n): .. z = 1.96 .. phat = 1.0 * pos / n .. return ( .. phat + z*z/(2*n) - z * math.sqrt((phat*(1-phat)+z*z/(4*n))/n) .. )/(1+z*z/n) .. wilson_score(997,1000) => 0.9912168282105722 1-wilson_score(3,1000) => 0.9989792345945556Ballcuzi on November 24th, 2017 at 07:02 UTC »

Someone takes the time to concisely deconstruct FCC comments and display the results in a scientific manner - and the top comment is "Reddit posts with links to places you can go and have a premade comment, text or even voicemail sent to a congressman"

garnet420 on November 23rd, 2017 at 23:03 UTC »

Tangentially, is there any chance someone can be legally liable for submitting bot comments using "stolen" identity information?