When news broke last month that two elderly patients in Brisbane had been given an incorrect dose of the COVID-19 Pfizer vaccine, a motley assortment of Australian Facebook pages and groups swung into action.

Key points:

Anti-vaccine claims continue to circulate on Facebook more than one month after the ban

Analysis shows the most popular anti-vaccine groups are getting more attention than ever

Facebook deleted an anti-vaccine group after being alerted by the ABC

Within minutes, a page with more than 52,000 followers had posted a screenshot of a news headline with superimposed text reading: "And the culling of old people begins".

Another page with more than 30,000 followers cast doubt on whether the dosage had been an accident (it had) and claimed the government had made the COVID vaccine mandatory for Australians (it had not).

The two posts were viewed by thousands of people, 2,600 of whom interacted by liking, commenting or sharing. (Neither page mentioned that the elderly patients showed no adverse reaction.)

Compared to all the traffic on Facebook, this was a drop in the ocean. And yet the posts were significant because they marked a test for Facebook.

Two weeks earlier, on February 9, the company had promised to "immediately" crack down on COVID-19 misinformation around the world by deleting content that made incorrect claims about the virus and the vaccines.

Among the false statements it would police, it said, was the claim that the vaccine killed people.

And so here was a test: Posts claiming that vaccines kill.

The posts stayed up. Three weeks later, they're still up.

The most-liked comment for one of the posts reads: "Unbelievable what lengths the governments will go to cover up the effects of the deadly jab. It's going to be murder on a grand scale."

As Australia rolls out COVID vaccines, experts have expressed frustration with Facebook for the volume of anti-vaccine claims that continue to circulate on the platform.

Despite Facebook promising to delete pages and groups that repeatedly posted anti-vaccine claims, it appeared to only act after being contacted by the ABC.

On Tuesday this week, the ABC contacted Facebook about its moderation of anti-vaccine claims and referred to three prominent and easy-to-find Australian examples of Facebook pages and groups that appeared to be posting banned claims.

One of these groups, Medical Freedom Australia, had more than 21,000 members and had accrued about half a million interactions from 10,000 posts since May 2020.

By Thursday, it was gone. Facebook had deleted it for repeatedly breaching its misinformation policies.

Today, after this story had been published, Facebook deleted a second page referred to it by the ABC three days earlier.

Protesters at an anti-vaccination rally in Sydney on February 20, 2021.

Australians vs the Agenda had about 30,000 followers and had been growing fast: since the February 9 Facebook policy announcement, the page's followers had increased 30 per cent, or by more than 7,000.

In that time, the page had averaged more than 12,000 daily interactions (likes, comments and shares) on its posts.

That's a very high interaction rate for a relatively small number of followers.

By comparison, the ABC News Facebook page has 100 times more followers but an interaction rate that's only about three times higher.
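The comparison can be made concrete with some rough arithmetic. The sketch below uses the follower and interaction figures quoted above; reading "100 times more followers" as exactly 100x and "about three times higher" as exactly 3x is an assumption made for illustration, not a reported figure.

```python
# Figures quoted in the article for the Australians vs the Agenda page.
page_followers = 30_000
page_daily_interactions = 12_000

# Assumption: the ABC News page has exactly 100x the followers and
# exactly 3x the daily interactions. The article gives only rough multiples.
abc_followers = page_followers * 100
abc_daily_interactions = page_daily_interactions * 3


def interactions_per_follower(interactions: float, followers: float) -> float:
    """Daily interactions divided by follower count."""
    return interactions / followers


page_rate = interactions_per_follower(page_daily_interactions, page_followers)
abc_rate = interactions_per_follower(abc_daily_interactions, abc_followers)

# How many times more interactions per follower the smaller page draws.
ratio = page_rate / abc_rate

print(f"Page: {page_rate:.3f} interactions per follower per day")
print(f"ABC News: {abc_rate:.3f} interactions per follower per day")
print(f"Ratio: about {ratio:.0f} to 1")
```

Under these assumptions, the smaller page draws roughly 33 times as many interactions per follower as the ABC News page.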

The page last month organised a public anti-vaccine rally attended by thousands in capital cities around the country.

It had lately been selling branded merchandise, including t-shirts, hats and hoodies.

This is what Facebook shows when a page has been deleted. (Supplied)

Facebook anti-vaccine moderation 'pretty slow at this point'

The anti-vaccine claims Facebook banned on February 9 include:

That COVID-19 is man-made or manufactured

Wearing a face mask does not help prevent the spread of COVID-19

Vaccines are not effective at preventing the disease they are meant to protect against

It's safer to get the disease than to get the vaccine

Vaccines are toxic, dangerous, or cause autism

More than one month later, Facebook pages and groups targeting Australians continue to post claims that appear to breach the ban.

This week, for instance, a page with more than 30,000 followers posted the false claim that the Prime Minister had said COVID-19 was a "trojan horse event for the Great Reset" — a reference to the conspiracy theory that the virus was manufactured in order to force people to be vaccinated with microchips and enslaved.

A post a day earlier had referenced the false claim that the Pfizer vaccine performs "gene therapy" to alter people's DNA.

In recent days, the news that a small number of people developed blood clots after being administered the AstraZeneca vaccine has been seized on as evidence of a murderous global conspiracy led by "elites" such as Bill Gates.

The situation in Australia is part of a broader, worldwide problem of anti-vaccine claims circulating on Facebook, according to the global fact-checker organisation First Draft.

The organisation has counted more than 3,200 posts making banned anti-vaccine claims published on Facebook since February 9.

Some of these posts were eventually demoted or deleted, but by then they had already received a lot of interactions, said Esther Chan, First Draft's Australian bureau editor.

"Even if Facebook enforcement is working, it's pretty slow at this point," she said.

"Some posts get actioned on the moment they're posted and then some don't. And then some get taken down eventually. And some are still around."

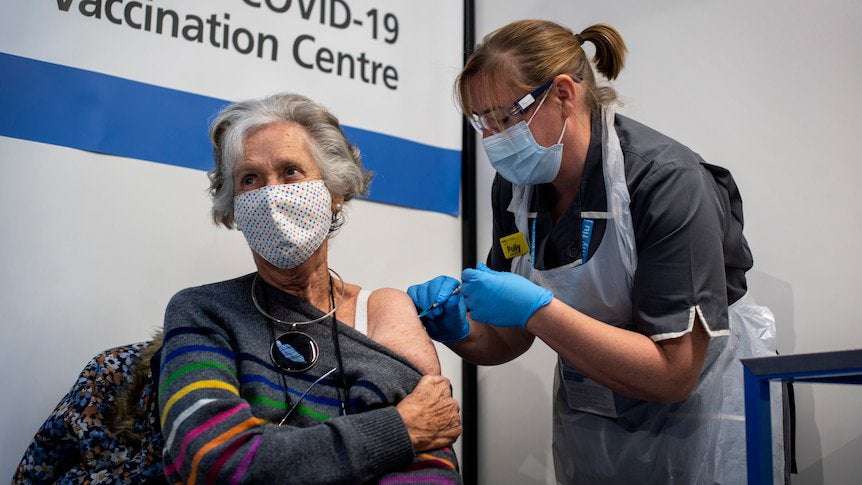

Healthcare workers getting the Pfizer vaccine. (Getty: Paul Bronstein)

In response, Facebook says it's doing a lot behind the scenes.

This includes giving free advertising to governments for public health information and deleting or flagging as misleading tens of millions of anti-vaccine posts worldwide.

It says it demotes anti-vaccine pages, groups and posts so that they reach fewer people, deploys human fact-checkers and AI algorithms, and works with government organisations that hunt out misinformation.

The company says it pulled down almost 12 million pieces of COVID-19 misinformation worldwide between March and October 2020, and applied warning labels to about 167 million pieces of content.

To gauge whether these measures are working, a team of UTS researchers has been studying how much attention 12 Australian anti-vaccine pages have received over the past 18 months.

They found the 12 pages recorded a steady decline in their aggregate "performance score", made by calculating a moving average of the number of shares per post, and comparing this against a baseline figure.

In other words, they were getting less attention.
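A score of this kind can be sketched in a few lines. This is only an illustration of the general idea, not the UTS team's actual method: the window size, the baseline value, the choice to express the comparison as a ratio, and the sample numbers are all assumptions.

```python
from statistics import mean


def moving_average(values, window):
    """Trailing moving average: one value for each full window of posts."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [mean(values[i - window:i]) for i in range(window, len(values) + 1)]


def performance_score(shares_per_post, window, baseline):
    """Moving average of shares per post, divided by a baseline figure.

    Assumption: the comparison is a ratio, so a score above 1.0 means the
    page is drawing more shares than the baseline and below 1.0 means fewer.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return [avg / baseline for avg in moving_average(shares_per_post, window)]


# Made-up share counts for six consecutive posts, for illustration only.
shares = [120, 100, 80, 60, 40, 40]
scores = performance_score(shares, window=3, baseline=100)
print([round(score, 2) for score in scores])
```

With these made-up numbers the score falls with each new post, which is the kind of steady decline the researchers describe for the 12 pages in aggregate.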

This could mean Facebook has been progressively demoting anti-vaccine content in Australia since 2019 and in particular since the beginning of the pandemic in March 2020, said one of the researchers, Dr Francesco Bailo.

"This is compatible with some sort of control on these pages," he said.

"We see that these pages experienced a significant decline. And then basically they plateaued in the second half of 2020."

... but some anti-vaccine pages are getting more attention

But digging a little deeper, the picture gets more complicated.

When the researchers focused on just the best-performing pages, they found the aggregate score representing attention went up.

"Instead of a decline, you have an increase in the number of shares," Dr Bailo said.

"And actually, you have a significant increase, especially around the end of 2020 and after January 2021."

In other words, Facebook was apparently not demoting the pages that were getting the most attention.

It also appeared to not be deleting pages; from the sample of 12 pages selected in 2019, all but one were still posting content this month (and the one that wasn't was shut down for unknown reasons).

Ms Chan said she was surprised Facebook was allowing some pages to keep posting.

"At the moment, we have a pandemic going on. If there are a lot of people sharing the opinion the vaccine will kill you, then a lot of people are not going to get vaccinated," she said.

The subtle art of avoiding moderation

Some of Facebook's difficulty with moderating anti-vaccine claims can be put down to the complexity of language itself.

While a bot can easily detect, say, nudity in an image, it has a harder time understanding the way that an innocuous phrase can be loaded with meaning in a certain context.

Anti-vaccine pages appear to have become adept at circumventing moderation by posting questions, rather than making explicit claims.

Other times, they'll post a news headline, but leave out crucial context.

"Every time there's some reports about vaccines, they will always put a negative spin to it," Ms Chan said.

"A lot of these posts are very cleverly crafted in a way that it sounds like their opinions, or just someone's comment."

For example, this week's news that prosecutors in Italy have launched a manslaughter case after a man died shortly after getting the AstraZeneca vaccine was widely reported on anti-vaccine pages and met with predictable outrage.

A popular comment on one Australian page reads: "It's the vaccine. So many people are dying & most have bad side affects (sic). Its (sic) a man made (sic) virus & all involved including the WHO UN WEF should all be charged with genocide."

But the crucial follow-up news was not reported on these pages: a subsequent autopsy had found the man died of a heart attack and there was no evidence of blood clots or any other link to the AstraZeneca vaccine.

The rollout of the AstraZeneca vaccine has been temporarily halted in some countries. (Getty: Darren Staples)

This type of malinformation (information taken out of context with malicious intent) is not only hard to detect, but puts Facebook in an awkward bind: let it go or enter the murky world of context and imputation.

In a statement, Facebook Australia's head of public policy, Josh Machin, said misinformation was a "highly adversarial and ever-evolving space".

"Which is why we continue to consult with outside experts, grow our fact-checking program and improve our internal technical capabilities," he said.

"We will remove false claims about the safety, efficacy, ingredients or side effects of the vaccine, including conspiracy theories, and continue to ban ads that discourage vaccines, and remove COVID-19 misinformation that could lead to imminent physical harm."

Whether Facebook can control its own platform remains to be seen.

Experts like Ms Chan have their doubts.

"I just want to know, what is not working?" Ms Chan said.

"Is it the algorithm? Is it the human editor? Or is it just the platform itself?

"The platform itself wasn't built to be moderated. It was supposed to be just a place where everyone can say what they can.

"Even after all these years, maybe they're still not equipped to do it?"
